Mistrall Small 3 Eschews Synthetic Data - What Does This Mean?

Mistral AI has released Mistral Small 3, which claims to not use synthetic data in their training pipeline. Weighing in at 22B with 32k context, this is a lightweight model that can be run at full weights on an A40, and nearly any GPU spec that we offer while quantized. To understand why the lack of synthetic data is relevant, we need to go into what it is, and why a model trained on it may or may not be great for your use case.

What is Synthetic Data?

Synthetic data refers to artificially generated information that mimics the statistical properties and patterns of real-world data. In the context of LLM training, this can include automatically generated text, conversations, code, and other forms of language data created through various algorithmic approaches, including using existing AI models to generate training data for new models. Mind you, this does not mean that the data is simply taken as it is – you still have annotators and other forms of verification in the loop, but these methods are more going to be for fact checking purposes and standardization rather than significantly altering the form of the text.

The use of synthetic data in LLM training has grown significantly since 2022. As of writing, there are 1,108 text datasets on Huggingface listed as 'synthetic' – and these are only the datasets declaring it. The application of synthetic data to augment a dataset is often much higher. Gartner has predicted that synthetic data would comprise 60% of training data by 2024, up from 1% in 2021.

Notable examples of synthetic data usage include:

- DeepMind's approach to training AlphaCode, where they used large-scale generation of synthetic programming problems and solutions to augment their training data.

- Google's use of synthetic data to augment training datasets for specialized domains

- Microsoft's research into using synthetic data to improve code generation models, such as in its Phi series

Pitfalls and challenges

So it's clear that synthetic data has its uses, especially in domains where data may be difficult or even impossible to gather or collate. But are there are any downsides?

Quality and Authenticity Concerns

Synthetic data may not fully capture the nuances, irregularities, and complexity of real-world language use. This can lead to models that perform well on synthetic test data but struggle with actual user interactions. The generated data might also perpetuate patterns and biases present in the generation model itself. This could lead to a "Xerox of a Xerox" effect where the only the boldest parts of the signal survive the transfer while subtleties are lost. LLMs work by predicting the next most common token, while human language doesn't quite work the same way.

Human language generation, emerges from our ability to form and express original thoughts, emotions, and intentions. When we speak or write, we begin with a meaningful concept we wish to convey. We then actively construct language to communicate that meaning, drawing on our understanding of grammar, context, and shared human experiences. In other words, with humans the thought comes first, then the tokenization. LLMs have to tokenize without the benefit of that prior thought (because, well, they can't think.) Synthetic data, therefore, can lead to synthetic speech patterns, and this represents a problem with creative pursuits using LLMs.

Risk of Circular Or Incorrect Learning

When using existing AI models to generate training data for new models, there's a risk of creating an echo chamber effect where the new model simply learns to replicate the limitations and biases of the generating model. This can lead to a degradation of quality across generations of models. It can be also difficult to verify that synthetic data accurately represents real-world scenarios and edge cases. Organizations need robust validation processes to ensure that models trained on synthetic data will generalize effectively to real-world applications. There's a reason that you're admonished to check all LLM output for accuracy as they can give incorrect information. If this incorrect information winds up being taken as factual during training, it can be even more devastating as now it is part of the model and may be given much more frequently instead of a probabilistic one-off.

Lack of Real-World Context

It can be difficult to verify that synthetic data accurately represents real-world scenarios and edge cases. Organizations need robust validation processes to ensure that models trained on synthetic data will generalize effectively to real-world applications.

High and Low-Effectiveness Domains

Programming and code generation represent one of the most successful applications of synthetic data. The structured nature of programming languages, clear syntax rules, and well-defined patterns make it easier to generate high-quality synthetic examples. Companies can generate variations of coding problems and solutions that maintain semantic correctness while introducing meaningful diversity in implementation approaches.

Cultural and creative writing represents an area where synthetic data often falls short. The subtle cultural references, creative expression, and artistic elements that make writing engaging are difficult to replicate synthetically. Models trained primarily on synthetic creative content often produce generic or derivative outputs.

So, in short, the more structured and predictable your use case is, the more synthetic data is likely to be a benefit. There are conventions and rules that software engineering has decided on that people generally follow. The more unpredictable and spontaneous, the more likely it is to be a detriment - creative writing, poetry, etc. is where a model like Mistral Small 3 that doesn't use synthetic data is likely to shine.

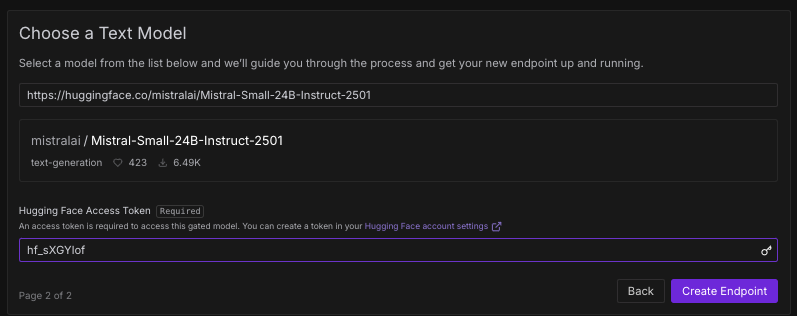

Running Mistral-Small-3 on RunPod

You can run Mistral-Small-3 in serverless by just going to your Serverless console, plugging in the HF path and your access token (since it's a gated model) and off you go.

If you'd rather run it into a pod, I'd recommend text-generation-webui as a good starting point for ease of use - we have a blog on how to deploy a model here.

Here's a table that shows how much VRAM that you would need for the model at various quant levels, along with the cheapest recommended GPU spec:

| Quantization Level | Memory Required | Minimum NVIDIA GPU |

|---|---|---|

| Full (FP16/BF16) | ~44 GB VRAM | A6000, A40 |

| 8-bit (INT8) | ~22-24 GB VRAM | A5000 |

| 6-bit | ~17-18 GB VRAM | RTX A4500 |

| 4-bit (INT4) | ~11-12 GB VRAM | RTX A4000 |

Conclusion

Synthetic data is incredibly useful as a training tool, but the question is - is it right for you? Despite a higher parameter count, you may find an LLM's output unsatisfactory compared to a smaller model like this one that doesn't contain synthetic data. Hopefully, this inspires some food for thought and helps you make the best decision for your GPU spend.