Groundbreaking NVIDIA H100 GPUs Now Available on RunPod

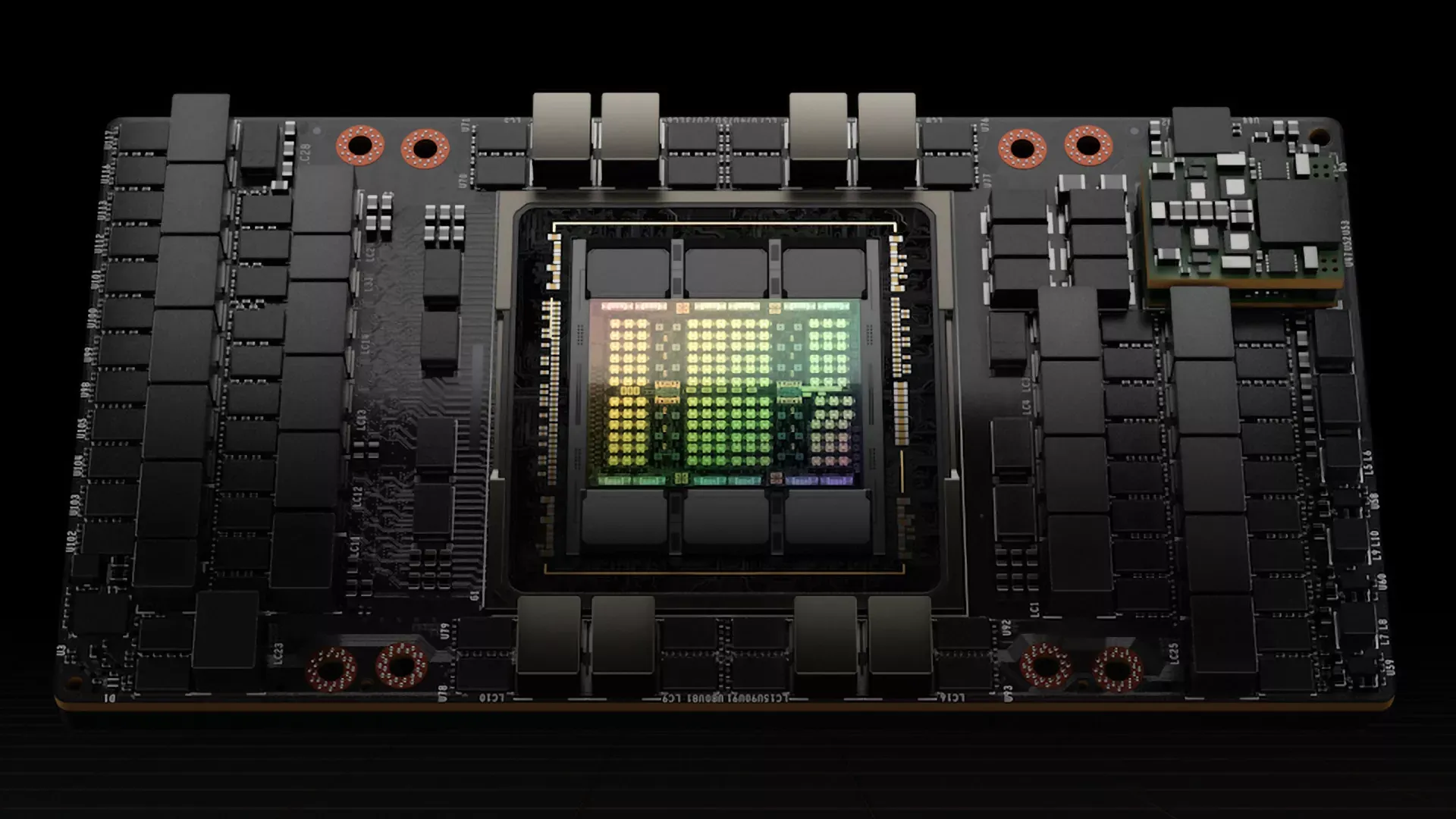

The demand for generative AI models continues to explode, and so does the need for hardware that can keep up with their ever-escalating performance requirements. Consumer-grade GPUs built for gaming are great for learning, tinkering, and hobbyist projects. However, as noted in our previous blog, the computational demands of serious AI work can quickly outstrip what these cards can do, and enterprise-grade hardware becomes necessary. VRAM in particular is often the limiting factor on consumer cards, and the cost per gigabyte can climb fast enough to make a project untenable. RunPod already offers high-end GPUs that are out of reach for many individual users, such as the RTX 6000, A40, and A100. Today we're delighted to announce that we now also offer the H100, the latest generation of NVIDIA's data-center AI GPUs and the most powerful GPU yet built for accelerating AI workloads. This game-changing addition to our lineup will change the way you use AI to drive success in your organization.

Why the H100?

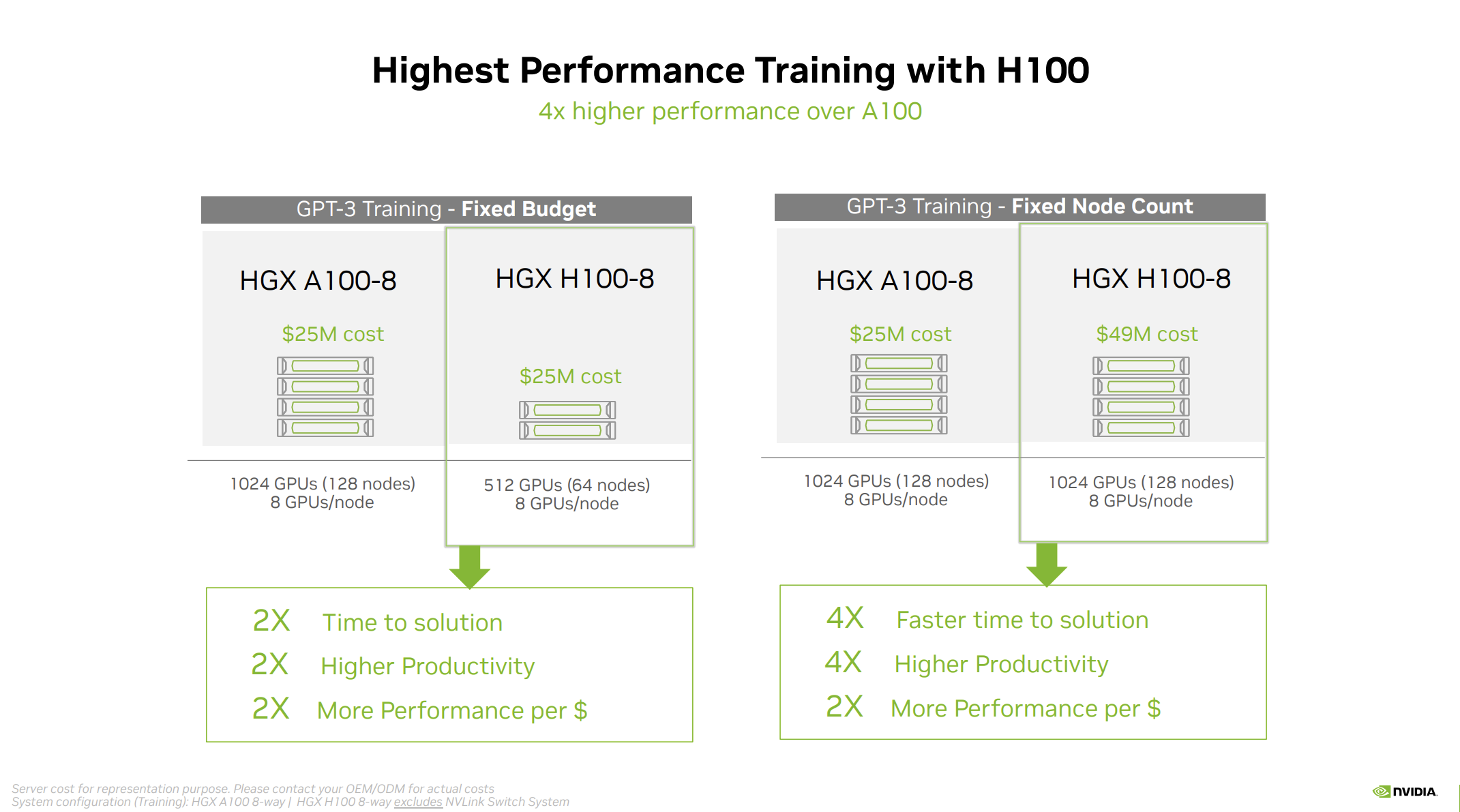

The H100 is already in use, or planned for use, by some of the biggest names in AI, including OpenAI, Meta, Stability AI, Mitsui, Johns Hopkins, and many others. AI models are likely to keep growing for the foreseeable future. For example, most commonly available text generation models are between 6 and 50 billion parameters, GPT-3 has 175 billion, and GPT-4 has been estimated (OpenAI has not published a figure) at up to 1 trillion. Models at this scale need hardware that not only has the raw computational power to shoulder the load but also delivers it efficiently, so that the job gets done faster and you pay less per unit of compute. The cost of training AI models keeps rising, and the rapid growth in parameter counts will only make that worse. That is why it's important to have hardware that gets the job done not just faster, but also smarter.
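As a rough illustration of why parameter counts drive hardware requirements, the sketch below estimates how much memory the model weights alone occupy. It assumes 2 bytes per parameter (FP16/BF16) and 80 GB of memory per GPU (the capacity of the 80 GB A100 and H100), and it ignores activations, KV cache, optimizer state, and framework overhead, so real requirements are higher. The helper names are hypothetical.

```python
import math

# Assumptions for illustration only:
# - 2 bytes per parameter (FP16/BF16 weights)
# - 80 GB of memory per GPU
# Activations, KV cache, optimizer state, and overhead are NOT counted.
BYTES_PER_PARAM = 2
GPU_MEMORY_GB = 80


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Lower bound on the number of GPUs needed to fit the weights alone."""
    return math.ceil(weight_memory_gb(num_params) / gpu_memory_gb)


# Parameter counts mentioned in the text (the 1T figure is only an estimate).
models = [("6B", 6e9), ("50B", 50e9), ("175B (GPT-3)", 175e9), ("1T (estimate)", 1e12)]

for name, params in models:
    print(
        f"{name:>14}: {weight_memory_gb(params):7.0f} GB of weights, "
        f"at least {min_gpus_for_weights(params)} x 80 GB GPU(s)"
    )
```

Under these assumptions, GPT-3's 175 billion parameters need about 350 GB for the weights alone, which is more than four 80 GB GPUs can hold.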

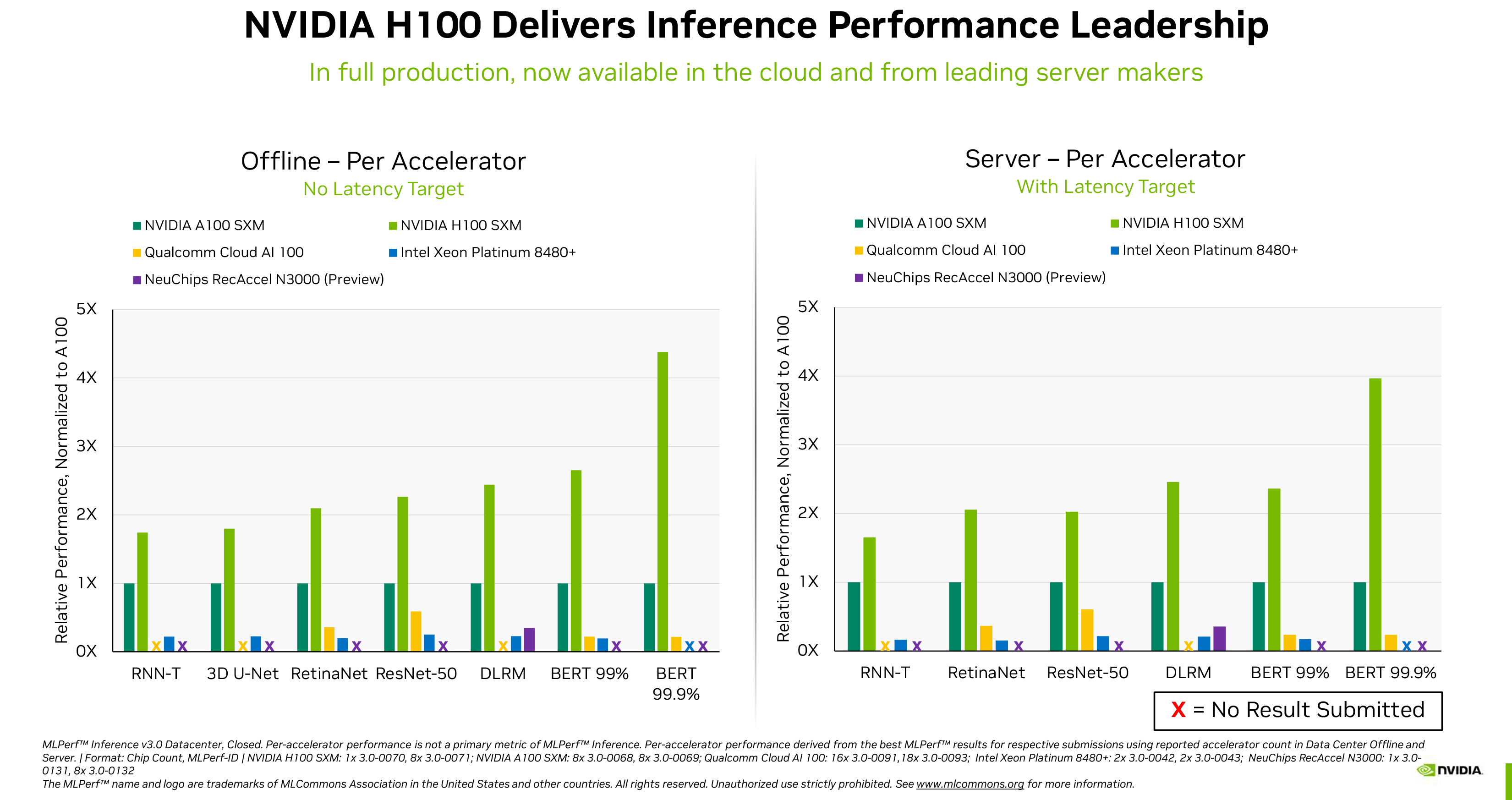

Compare the H100's performance with that of the A100 currently offered on RunPod. While H100 pricing is still to be determined, you should see a severalfold increase in performance for what will likely be a modest price increase, in line with previous generational upgrades.

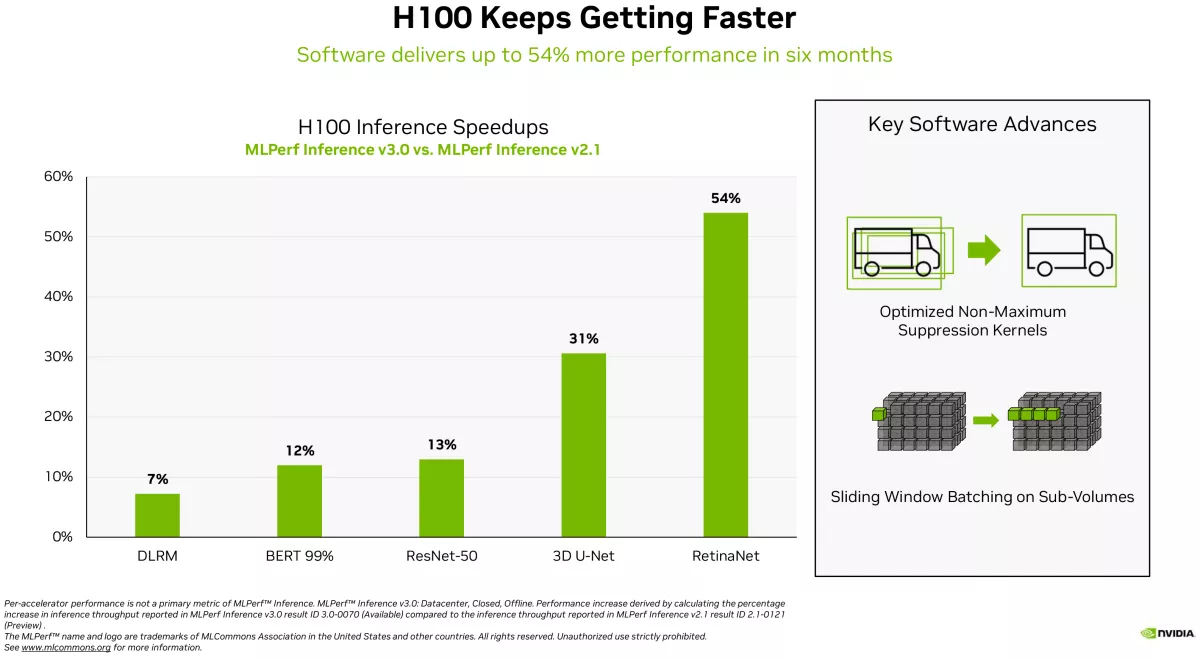

The H100 is also optimized for generative AI workloads to an unprecedented degree, including large language models, recommendation models, and video and image generation. Depending on the use case, the H100 can deliver 7 to 12 times the performance of the A100. On top of that, software optimizations available only on the H100, combined with the new Hopper architecture built specifically for AI inference, put it head and shoulders above previous generations.
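To make the price/performance argument concrete, here is a minimal sketch of the arithmetic. It assumes a job's cost is simply hourly price times runtime, and that a speedup shortens runtime proportionally, so the relative cost of a job is the price ratio divided by the speedup. The function name `relative_job_cost` and the price ratios are hypothetical placeholders, since H100 pricing on RunPod has not been set.

```python
# Hypothetical illustration: H100 pricing is still to be determined,
# so the price ratios below are placeholders, not RunPod prices.


def relative_job_cost(price_ratio: float, speedup: float) -> float:
    """Cost of a fixed job on the new GPU relative to the old GPU.

    cost = hourly price x hours. The new GPU finishes in (old hours / speedup),
    so new_cost / old_cost = price_ratio / speedup.
    """
    if price_ratio <= 0 or speedup <= 0:
        raise ValueError("price_ratio and speedup must be positive")
    return price_ratio / speedup


# Speedups taken from the 7x to 12x range quoted above; price ratios are assumptions.
for price_ratio in (1.5, 2.0):
    for speedup in (7.0, 12.0):
        cost = relative_job_cost(price_ratio, speedup)
        print(f"price x{price_ratio}, speedup x{speedup}: job costs {cost:.0%} of the A100 job")
```

For example, `relative_job_cost(1.5, 3.0)` returns `0.5`: a GPU that costs 1.5 times as much per hour but finishes three times faster halves the cost of the job.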

As of the launch of the H100, RunPod will offer the following server loadouts:

Down the road, we also plan to offer the H100 NVL, with an estimated launch by the end of 2023.

Questions?

Due to anticipated high demand, RunPod currently plans to offer the H100 through a reservation system. If you're interested in accessing one of these pods, please reach out to us and submit your use case for consideration.