Why Altering the Resolution in Stable Diffusion Gives Strange Results

Stable Diffusion was originally designed to create images that are exactly 512 by 512. It does let you create images that are up to 2048 by 2048 (GPU resources permitting). However, when you try, you are very likely to get results that appear to be fighting against your prompt. Why is this?

The reason is that in any images that are larger than 512x512 pixels, it will create independent "cells" composed of 512x512 and overlay them until the entire image space is accounted for. It will apply the prompt to each individual cell within the image, and then converge the cells together. This process is pretty opaque to the user, and might throw a wrench into your plans unless you account for it.

Take an image generated by a prompt "instagram, detailed faces, three women standing in a field." Pretty standard prompt, and at 512x512 it shouldn't give you too much trouble:

However, even mildly altering the dimensions (in this case, 768x896) I'll get more people than what I asked for, and they'll be standing in odd places in relationship to each other.

The reason is that in each individual "cell", the image will make sense in the context of that particular cell. However, when all of these cells are stitched together, it is high unlikely that the cells will make sense in relation to each other. The people in each row look fine next to each other, but when the rows are placed next to each other, there is no sense of perspective and it makes the people in the back row look massive in comparison. Stable Diffusion has no concept of accounting for this sense of perspective, which is why you get the results that you get. If a person's limb extends past the line of the cell that they're in, it may just not render the limb at all. Obviously, this is an unpleasant, unnatural look.

However, depending on what you're generating, this may not matter. Creating a field without human subjects will look fine at nonstandard resolutions. You're probably not going to see patches of grass in the sky, for example.

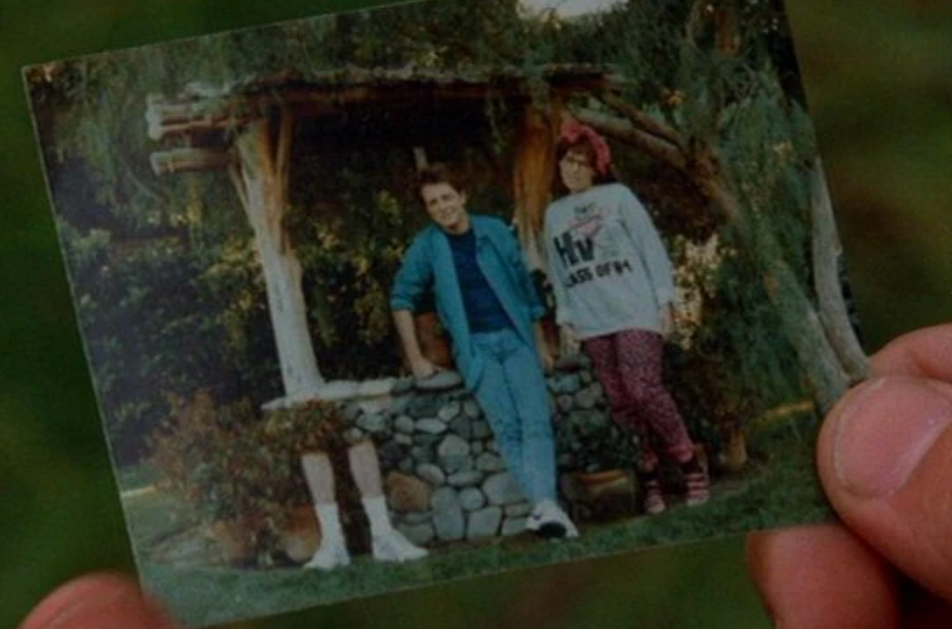

The reason why it's able to handle this instead is because something like a field is not a discrete object in the sense that a human being is. You can parcel out a field in uncountably many ways, so when Stable Diffusion needs to create cells to stitch the image together for the final result, it's impossible to tell where it did it. However, any segmentation of, say, a person beyond their naturally occurring boundaries is going to give you results like:

(Okay, that's just Back to the Future, but you'll run into the exact same problem in SD!)

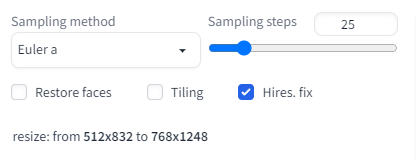

If you need to generate images that are larger than 512x512 where the subject being potentially bisected is a concern, then tick the "Hi-res fix" checkbox. This will, for a pretty hefty resource draw, allow you to create images that aren't subject to this limitation. However, if you need to create non-standard image sizes, it's going to be the best way rather than rolling the dice on the canvas size alone.

Have any further questions about Stable Diffusion best practices? Join the RunPod Discord and talk to us!