Streamline GPU Cloud Management with RunPod's New REST API

Managing GPU resources has always been a bit of a pain point, with most of the time spent clicking around interfaces with repetitive manual configuration. Our new API lets you control everything through code instead, which is great news for those who'd rather automate repetitive tasks and focus on the actual machine learning work. For those of you more familiar with our internal workings, this was known as GraphQL, and this will function much the same, but with several under-the-hood improvements to further streamline the process. Let's explore how this new API can transform your workflow and examine some practical examples of its capabilities.

Key Benefits of RunPod's REST API

Complete Programmatic Control

The new REST API provides end-to-end management capabilities for your GPU resources. From creating and configuring pods to managing serverless endpoints, everything can now be automated through simple HTTP requests. This eliminates the need for manual intervention through web interfaces, making it perfect for integration into CI/CD pipelines and automation workflows.

Flexible Resource Configuration

Whether you need GPUs for training large models or CPU resources for preprocessing tasks, the API offers granular control over your computational resources:

- Choose from a variety of GPU types (RTX 4090, A5000, A40, etc.)

- Configure CPU flavors and vCPU counts

- Specify memory requirements

- Set up persistent storage volumes

Global Deployment Options

The API allows you to specify data center locations across multiple regions, ensuring your workloads run close to your data or end-users, with support for both Secure Cloud and Community Cloud environments.

Cost Optimization

The API enables interruptible/spot instance deployment and management, helping you significantly reduce costs for non-time-sensitive workloads.

Practical Examples

Let's look at some real-world examples of how you can leverage this API.

Example 1: Creating a new pod

To create a new pod with an RTX 4090 GPU running PyTorch:

curl https://rest.runpod.io/v1/pods \

--request POST \

--header 'Content-Type: application/json' \

--header 'Authorization: Bearer YOUR_SECRET_TOKEN' \

--data '{

"gpuTypeIds": ["NVIDIA GeForce RTX 4090"],

"imageName": "runpod/pytorch:2.1.0-py3.10-cuda11.8.0-devel-ubuntu22.04",

"name": "my-pytorch-pod",

"env": {

"JUPYTER_PASSWORD": "secure-password"

},

"containerDiskInGb": 50,

"volumeInGb": 20,

"volumeMountPath": "/workspace",

"ports": ["8888/http", "22/tcp"]

}'

This will create a Pytorch pod on a 4090 GPU as requested.

Example 2: Creating a new serverless endpoint

For those looking to implement a cost-effective serverless architecture:

curl https://rest.runpod.io/v1/endpoints \

--request POST \

--header 'Content-Type: application/json' \

--header 'Authorization: Bearer rpa_TG1IX3V0HB2KN69ZKJE0W6104CNEV21HW2T9ZMII3gsa20' \

--data '{

"name": "object-detection-api",

"templateId": "ovz4ztpnq2",

"gpuTypeIds": ["NVIDIA GeForce RTX 4090"],

"scalerType": "QUEUE_DELAY",

"scalerValue": 4,

"workersMin": 0,

"workersMax": 5,

"idleTimeout": 5,

"executionTimeoutMs": 600000

}'

This will create an endpoint from a serverless template that was previously created.

Example 3: Monitoring and Scaling

Check the status of your pods and scale or stop them as needed.

# List all your pods

curl https://rest.runpod.io/v1/pods \

--header 'Authorization: Bearer YOUR_SECRET_TOKEN'

# Stop a specific pod when not needed

curl 'https://rest.runpod.io/v1/pods/your-pod-id/stop' \

--request POST \

--header 'Authorization: Bearer YOUR_SECRET_TOKEN'

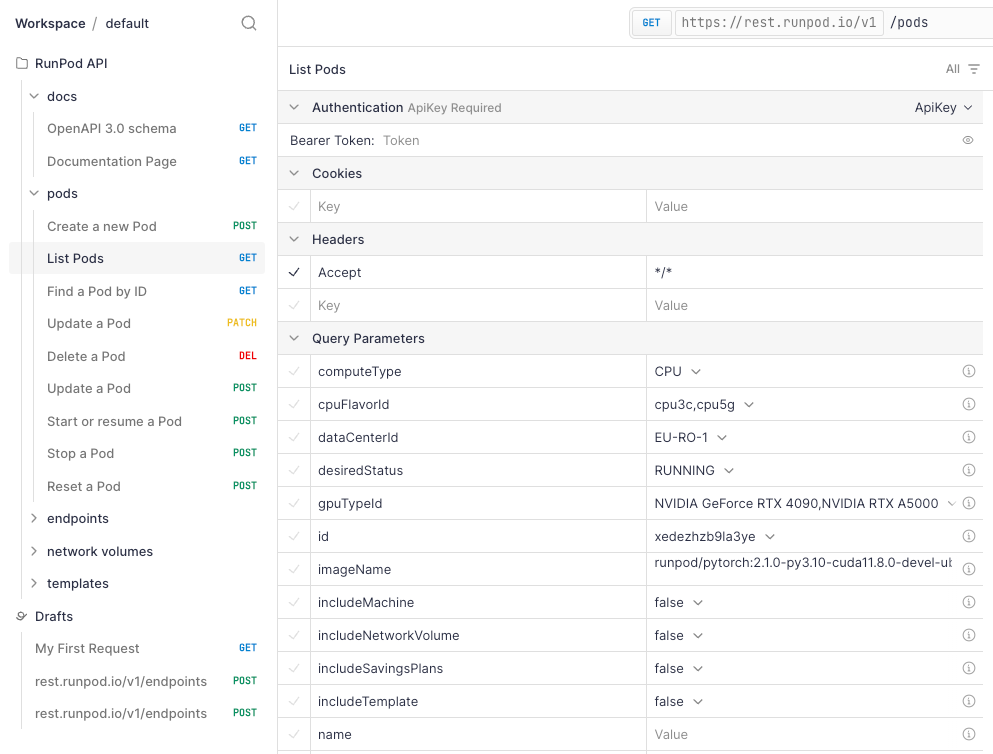

Example 4: Use the scalar.com UI

Go to the docs page for the REST API and click on “Open API Client” in the bottom left. This will provide a UI playground, coming pre-filled with several example requests that you can tinker with at your leisure:

Integration Possibilities

The API's flexibility opens up numerous integration scenarios:

MLOps Pipelines become much more fluid with this API. Instead of manually provisioning resources before each training run, you can code your pipeline to automatically spin up specific GPU configurations when jobs are queued. Once training completes, the resources can be released just as automatically. This removes a major bottleneck in ML workflows where data scientists often waste time waiting for infrastructure to be provisioned or remain idle.

Cost Management Systems gain new capabilities with programmatic control. You can write scripts that automatically shut down expensive GPU resources during nights and weekends when your team isn't actively working. Some organizations have reported 30-40% cost savings by implementing simple time-based pod management, especially for development environments that don't need to run 24/7.

Auto-scaling Web Services that use GPU acceleration for inference can now dynamically adjust to demand. Your application monitoring system can watch request queues or response times and trigger the creation of additional GPU pods or serverless workers during high-traffic periods. When demand subsides, extra instances can be gracefully shut down, ensuring you're only paying for what you need, when you need it.

Conclusion

RunPod's new REST API represents a significant step forward for organizations looking to bring infrastructure-as-code practices to their GPU cloud resources. By enabling programmatic control over every aspect of GPU resource management, it empowers developers and ML engineers to create more efficient, automated, and cost-effective workflows.

Whether you're a small team looking to automate repetitive management tasks or an enterprise implementing sophisticated MLOps pipelines, this API provides the flexibility and control needed to optimize your GPU infrastructure for modern AI development.