Mochi 1 Text-To-Video Represents New SOTA In Open Source Video Gen

Text-to-video generation is a space where open source has lagged behind for some time, due to the difficulty and cost involved in training and evaluating video as opposed to text and images. Offerings such as Sora, while impressive, beg for open-source alternatives where you can create videos of any kind with security and privacy. While Stable Video Diffusion and other alternatives help closed the gap somewhat, the newly released Mochi 1 by Genmo shoves the open source field that much closer and already appears to be the new state of the art in text-to-video inference.

So what can Mochi do?

According to their release, Mochi can can create videos following a text prompt with the following capabilities:

- 30 frames per second, up to 5.4 seconds

- 480p output resolution (preview version)

- Focus on photorealism and motion, with evaluators asked to focus on motion during the training process.

- Focuses on prompt adherence, allowing for detailed control over characters

We'll go through some examples of what Mochi can do, along with a quickstart guide so you can see for yourself.

Mochi compute requirements

The team behind Mochi advises that four H100s are required to use the system to its fullest capability. Fortunately, the workflow also scales just fine with smaller video requirements and you will be able to scale down your requirements to smaller hardware specs if necessary. Generations can be expected to take several minutes each at the model's maximum capacity, especially at high frame and step counts.

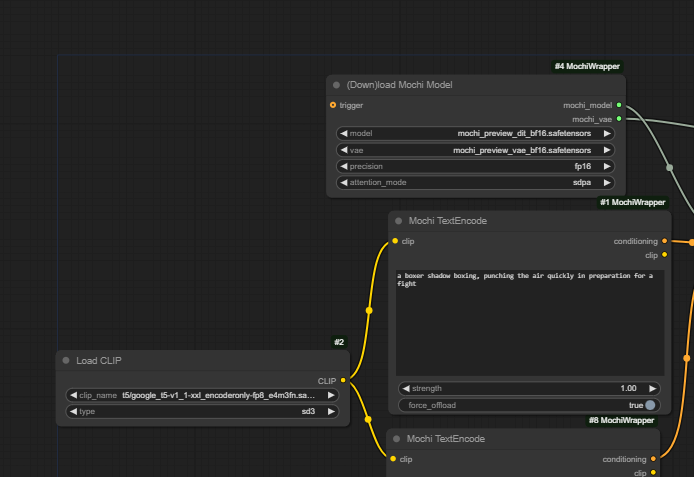

Fortunately, Camenduru has a ComfyUI workflow available that lets you experiment. As ComfyUI does not support GPU parallelism out of the box, you'll be limited to a single device, so you'll want to use the highest GPU spec available on a single card for this. For now, using the 94GB H100 NVL will let you eke out a bit more than the standard spec. Once the H200 launches with its 141 GB, that will likely become the ideal single GPU spec for this workflow.

As one GPU would just not normally be enough for this kind of work, the workflow uses VAE tiling to stitch together multiple generations to produce a final result. This approach addresses memory constraints and improves processing efficiency while maintaining image quality and coherence. Of course, this does come with some image quality tradeoffs along with potential frame skipping, but the workflow also opens up these parameters for adjustment. Nevertheless, it does enable you to generate videos at the full length with just one GPU, which should be a significant time savings as you explore how well it will fit for your use case.

prompt: "a boxer shadow boxing, punching the air quickly in preparation for a fight" (VAE tiling on)

prompt: "a boxer shadow boxing, punching the air quickly in preparation for a fight" (VAE tiling on)

You can disable enable_vae_tiling in the workflow to get around this, though you'll be pretty limited in the number of frames you can generate on a single H100 (realistically, to about 25 frames or so.) Again, the H200 will make this a bit more flexible once it launches for single-GPU use; I'd expect you to be able to get at least 2.5 seconds or so out of a single generation with tiling off.

prompt: a boxer shadow boxing, punching the air quickly in preparation for a fight (VAE tiling off)

You can see that disabling tiling greatly decreases the amount of artifacting. So the ideal workflow would be to leave tiling on while in the exploration phase of your use case, but seeing if disabling it is feasible when you are ready to go into production.

How to Get Started with Mochi on RunPod

- Spin up a Mochi 1 Preview by Camenduru pod with this template. Ensure to select an H100 pod for best results (ideally, an H100 NVL if you're trying to push the boundaries on what can done with a single GPU.)

- Download the ComfyUI workflow, save it somewhere, and then drag it to the pod window once it's booted up and you've connected.

- You may need to initialize the link to the model by tapping the clip_name arrow. You can also adjust the precision of the model (fp16 recommended for use on H100) and then enter your prompt.

Although the test prompt above was fairly shallow and still demonstrated good results, you can in fact get much more detailed with your prompts.

prompt: a side view of a boxer forcefully punching a heavy bag in preparation for a fight, in a dusty old gym with large windows that let the sunlight filter through, illuminating both the boxer and the bag with a theatric flair (VAE tiling off)

prompt: a side view of a boxer forcefully punching a heavy bag in preparation for a fight, in a dusty old gym with large windows that let the sunlight filter through, illuminating both the boxer and the bag with a theatric flair (VAE tiling off)

Other Inference Options

You've got other options to run Mochi on RunPod, of course. If you want to see it at its full level of capability, you can spin up a 4xh100 pod and create videos through the CLI or through Gradio as listed on the Github repo:

git clone https://github.com/genmoai/models

cd models

pip install uv

uv venv .venv

source .venv/bin/activate

uv pip install -e . --no-build-isolation

python3 -m mochi_preview.gradio_ui --model_dir "<path_to_downloaded_directory>"

python3 -m mochi_preview.infer --prompt "A hand with delicate fingers picks up a bright yellow lemon from a wooden bowl filled with lemons and sprigs of mint against a peach-colored background. The hand gently tosses the lemon up and catches it, showcasing its smooth texture. A beige string bag sits beside the bowl, adding a rustic touch to the scene. Additional lemons, one halved, are scattered around the base of the bowl. The even lighting enhances the vibrant colors and creates a fresh, inviting atmosphere." --seed 1710977262 --cfg_scale 4.5 --model_dir "<path_to_downloaded_directory>"

We are also working on a Better Mochi launcher to go along with our Better Forge/A1111/ComfyUI, to be launched shortly, watch this blog space for updates!

Conclusion

We're really excited to see what you create with Mochi 1! This is the fastest and easiest way to start creating videos on the service with just a simple text button and a few button clicks. Feel free to hop on our Discord to ask questions and show off your creations!