H200 Tensor Core GPUs Now Available on RunPod

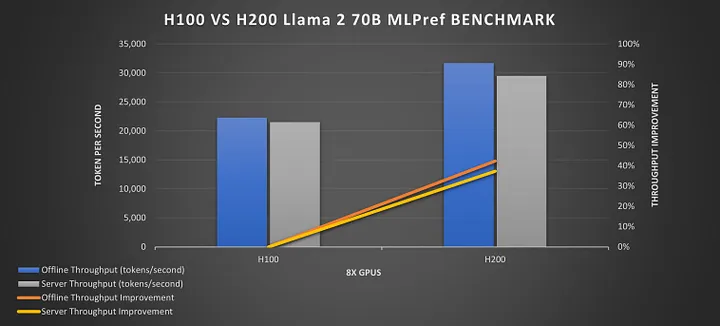

We're pleased to announce that the H200 is now available on RunPod for $3.99/hr in Secure Cloud. This GPU raises the VRAM available to NVIDIA-based applications to 141GB on a single card and increases memory bandwidth as well.

Here is how the stats shake out compared to the H100 (courtesy of Paul Goll on Medium):

| | NVIDIA H100 | NVIDIA H200 |
|---|---|---|
| Architecture | Hopper | Enhanced Hopper |
| Memory | 80 - 94GB | 141GB |
| Memory Bandwidth | 3.35 TB/s | 4.8 TB/s |
| Tensor Cores | 67 TFLOPS (FP64), 989 TFLOPS (TF32), 1,979 TFLOPS (FP16) | 67 TFLOPS (FP64), 989 TFLOPS (TF32), 1,979 TFLOPS (FP16) |
| NVLink Bandwidth | 900GB/s | 900GB/s |
| Power Consumption | Up to 700W | Up to 1000W |

Best uses for H200 GPUs

NVIDIA's own stated primary use case for the H200 is large language models, and this is where the card will truly shine, especially for models larger than 80GB. Not only will you get better performance, you will get it at a lower total price. Let's take a model that needs ~280GB to host (Llama-3 405B or Nous Hermes 405B at 6-bit quantization):

| | Cost per card | Units needed | Total cost per hour | H200 cost savings |
|---|---|---|---|---|
| H100 SXM | $2.99/hr | 4 | $11.96 | $3.98 |
| H100 PCIe | $2.69/hr | 4 | $10.76 | $2.78 |
| H100 NVL | $2.79/hr | 4 | $11.16 | $3.18 |
| H200 | $3.99/hr | 2 | $7.98 | |
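If you want to rerun these numbers with your own prices or card counts, the arithmetic is easy to sketch in Python. This is only an illustration: the prices and unit counts are copied from the table, and the names `configs`, `totals`, and `savings` are made up for this example rather than part of any RunPod tooling.

```python
# Hourly price per card and number of cards, copied from the table.
# The dictionary layout and variable names are illustrative only.
configs = {
    "H100 SXM": (2.99, 4),
    "H100 PCIe": (2.69, 4),
    "H100 NVL": (2.79, 4),
    "H200": (3.99, 2),
}

# Total hourly cost for each setup: price per card times number of cards.
totals = {
    name: round(price * units, 2)
    for name, (price, units) in configs.items()
}

# Hourly savings of the H200 setup relative to each H100 setup.
h200_total = totals["H200"]
savings = {
    name: round(total - h200_total, 2)
    for name, total in totals.items()
    if name != "H200"
}

for name, total in totals.items():
    line = f"{name}: ${total:.2f}/hr"
    if name in savings:
        line += f" (H200 saves ${savings[name]:.2f}/hr)"
    print(line)
```

Swapping in a different price or unit count in `configs` updates both the totals and the savings column.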

Compared to four H100 SXMs, a pair of H200s will do the job for about 67% of the cost ($7.98 vs. $11.96 per hour) and do a better job of it, not only because of more horsepower in the spec itself, but also because the model is spread over fewer units, which incurs less overhead from parallel processing. Per GB of VRAM, the H200 is also the better deal, at about 2.8 cents per GB per hour compared to roughly 3.0 to 3.7 cents for the H100 variants (80GB for SXM and PCIe, 94GB for NVL).

H200s will also excel at tasks where parallelization may not be an option, such as ComfyUI workflows. This will be very useful for video creation applications like Mochi that are cited as needing multiple H100s. Previously, the 94GB available on H100 NVLs represented a sort of hard cap on how much you could work with in ComfyUI without workarounds like SwarmUI. Here are some video templates and applications that would be great to try out with the H200:

We also plan to offer serverless capability for H200s in the very near future - keep watching this blog space for updates!