Create a custom AUTOMATIC1111 serverless deployment with your custom model

Have you ever wanted to create your own serverless AUTOMATIC1111 endpoint with a custom model that can scale up and down? Now you can do so without much hassle by following this guide!

Pre-requisites

A computer (local or cloud) with the following:

- docker installed

- git installed

- a relatively fast upload speed

- at least 100GB of free disk space

- your custom model on the machine, or a public link to get the custom model
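The checklist above can be verified quickly before starting. The following is a minimal sketch, assuming the build runs from the current directory; the function names are made up for this example, and the 100 GB threshold is taken from the list above.

```python
import shutil

REQUIRED_TOOLS = ("docker", "git")
MIN_FREE_GB = 100  # threshold from the prerequisites list


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def free_disk_gb(path="."):
    """Free space, in GB (10**9 bytes), on the filesystem holding `path`."""
    return shutil.disk_usage(path).free / 10**9


if __name__ == "__main__":
    for tool in missing_tools():
        print(f"missing: {tool}")
    free = free_disk_gb()
    status = "ok" if free >= MIN_FREE_GB else "low"
    print(f"free disk: {free:.0f} GB ({status})")
```

Upload speed is not checked here; it only matters later, when the finished image is pushed to a registry.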

Note that you cannot currently build docker images on RunPod!

We will be using the example here as a base: https://github.com/runpod-workers/worker-a1111. You can absolutely write your own custom worker and do whatever you like with it, but we'll try to keep it as dead simple as possible in this tutorial.

Start by navigating to the directory you would like to work in, and then run a git clone on that repository:

git clone https://github.com/runpod-workers/worker-a1111.git

This will create a directory called "worker-a1111" in your current folder; you can then enter the directory with:

cd worker-a1111

You should at least see the following files and folders:

- Dockerfile (The instruction file that builds your docker image.)

- src/rp_handler.py (The handler file is the Python code that is run as your worker.)

- src/start.sh (The start script is the script that is run when your container starts. It is responsible for invoking the handler and starting the automatic API internally.)
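To make the division of labour between these two files concrete, here is a minimal sketch of what a handler of this kind might do. It is not the repository's actual rp_handler.py. It assumes the web UI API listens on port 3000 (the port used in the start script shown later in this guide) and that the job input is passed straight to the web UI's /sdapi/v1/txt2img route. The names wait_for_api and build_txt2img_request are hypothetical.

```python
import json
import time
import urllib.error
import urllib.request

# Assumption: port 3000 matches the --port flag in src/start.sh.
LOCAL_API = "http://127.0.0.1:3000"


def wait_for_api(url, timeout_s=120, interval_s=0.5):
    """Poll `url` until the server answers or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            urllib.request.urlopen(url, timeout=5).close()
            return True
        except urllib.error.HTTPError:
            return True  # the server answered, even if with an error status
        except OSError:
            time.sleep(interval_s)  # not listening yet; try again
    return False


def build_txt2img_request(job, base_url=LOCAL_API):
    """Turn a job dict ({"input": {...}}) into a POST request for the API."""
    body = json.dumps(job["input"]).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def handler(job):
    """Forward the job input to the local API and return its JSON reply."""
    with urllib.request.urlopen(build_txt2img_request(job), timeout=600) as resp:
        return json.loads(resp.read())


# In a real worker the handler is registered with the RunPod SDK, e.g.
#   runpod.serverless.start({"handler": handler})
```

The start script launches the web UI in the background and then runs the handler, so the handler has to wait until the API is reachable before forwarding its first job.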

Now, if we want to replace the default model with a custom model, we need to do a few things. First, we need to delete the old model from the container image, then we need to add our custom model, and finally, we need to point the start script at the new model. All in all, it should require just a few lines of code changes!
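Because the model path has to agree in more than one place, a small consistency check can catch a mismatch before a long build. The sketch below assumes that both the Dockerfile and the start script pass the model path through a --ckpt flag, as the versions shown in this guide do; the function names are hypothetical.

```python
import re

# Matches "--ckpt /path" or "--ckpt=/path", but not other flags such as --ckpt-dir.
CKPT_FLAG = re.compile(r"--ckpt[ =]+(\S+)")


def ckpt_paths(text):
    """Return every path that follows a --ckpt flag in `text`."""
    return set(CKPT_FLAG.findall(text))


def same_model_path(dockerfile_text, start_script_text):
    """True when both files name exactly one checkpoint path, and it is the same."""
    docker_paths = ckpt_paths(dockerfile_text)
    start_paths = ckpt_paths(start_script_text)
    return len(docker_paths) == 1 and docker_paths == start_paths
```

From the repository root, this could be called as same_model_path(Path("Dockerfile").read_text(), Path("src/start.sh").read_text()) with Path imported from pathlib.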

If you have your model file locally:

I'll be using the Civitai safetensors model from https://civitai.com/models/4823/deliberate in this example. If you've downloaded it locally, place it in the same directory as the Dockerfile and rename it model.safetensors. Then make the following change to your Dockerfile:

FROM alpine/git:2.36.2 as download

COPY builder/clone.sh /clone.sh

# Clone the repos and clean unnecessary files

RUN . /clone.sh taming-transformers https://github.com/CompVis/taming-transformers.git 24268930bf1dce879235a7fddd0b2355b84d7ea6 && \
    rm -rf data assets **/*.ipynb

RUN . /clone.sh stable-diffusion-stability-ai https://github.com/Stability-AI/stablediffusion.git 47b6b607fdd31875c9279cd2f4f16b92e4ea958e && \
    rm -rf assets data/**/*.png data/**/*.jpg data/**/*.gif

RUN . /clone.sh CodeFormer https://github.com/sczhou/CodeFormer.git c5b4593074ba6214284d6acd5f1719b6c5d739af && \
    rm -rf assets inputs

RUN . /clone.sh BLIP https://github.com/salesforce/BLIP.git 48211a1594f1321b00f14c9f7a5b4813144b2fb9 && \
    . /clone.sh k-diffusion https://github.com/crowsonkb/k-diffusion.git 5b3af030dd83e0297272d861c19477735d0317ec && \
    . /clone.sh clip-interrogator https://github.com/pharmapsychotic/clip-interrogator 2486589f24165c8e3b303f84e9dbbea318df83e8

# --> HERE IS THE CHANGED LINE (REPLACES RUN wget) <--

ADD model.safetensors /

FROM python:3.10.9-slim

ENV DEBIAN_FRONTEND=noninteractive \
    PIP_PREFER_BINARY=1 \
    LD_PRELOAD=libtcmalloc.so \
    ROOT=/stable-diffusion-webui \
    PYTHONUNBUFFERED=1

SHELL ["/bin/bash", "-o", "pipefail", "-c"]

RUN apt-get update && \
    apt install -y \
    fonts-dejavu-core rsync git jq moreutils aria2 wget libgoogle-perftools-dev procps && \
    apt-get autoremove -y && rm -rf /var/lib/apt/lists/* && apt-get clean -y

RUN --mount=type=cache,target=/cache --mount=type=cache,target=/root/.cache/pip \
    pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

RUN --mount=type=cache,target=/root/.cache/pip \
    git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git && \
    cd stable-diffusion-webui && \
    git reset --hard 89f9faa63388756314e8a1d96cf86bf5e0663045 && \
    pip install -r requirements_versions.txt

COPY --from=download /repositories/ ${ROOT}/repositories/

COPY --from=download /model.safetensors /model.safetensors

RUN mkdir ${ROOT}/interrogate && cp ${ROOT}/repositories/clip-interrogator/data/* ${ROOT}/interrogate

RUN --mount=type=cache,target=/root/.cache/pip \
    pip install -r ${ROOT}/repositories/CodeFormer/requirements.txt

# Install Python dependencies (Worker Template)

COPY builder/requirements.txt /requirements.txt

RUN --mount=type=cache,target=/root/.cache/pip \
    pip install --upgrade pip && \
    pip install --upgrade -r /requirements.txt --no-cache-dir && \
    rm /requirements.txt

ARG SHA=89f9faa63388756314e8a1d96cf86bf5e0663045

RUN --mount=type=cache,target=/root/.cache/pip \
    cd stable-diffusion-webui && \
    git fetch && \
    git reset --hard ${SHA} && \
    pip install -r requirements_versions.txt

ADD src .

COPY builder/cache.py /stable-diffusion-webui/cache.py

RUN cd /stable-diffusion-webui && python cache.py --use-cpu=all --ckpt /model.safetensors

# Cleanup section (Worker Template)

RUN apt-get autoremove -y && \
    apt-get clean -y && \
    rm -rf /var/lib/apt/lists/*

RUN chmod +x /start.sh

CMD /start.sh

The line ADD model.safetensors / will add your model file to the container image.
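Since the build bakes the file into the image as-is, it can be worth confirming beforehand that model.safetensors really is a safetensors file and not, say, a saved error page. The sketch below relies on the safetensors layout: an 8-byte little-endian header length followed by a JSON header. The function name and the 100 MB upper bound on the header are choices made for this example.

```python
import json
import struct


def read_safetensors_header(path, max_header_bytes=100_000_000):
    """Parse the JSON header of a safetensors file; raise ValueError if malformed."""
    with open(path, "rb") as f:
        prefix = f.read(8)
        if len(prefix) != 8:
            raise ValueError("file is too short to be safetensors")
        # The first 8 bytes hold the header length as an unsigned 64-bit integer.
        (header_len,) = struct.unpack("<Q", prefix)
        if header_len > max_header_bytes:
            raise ValueError(f"implausible header length: {header_len}")
        raw = f.read(header_len)
    if len(raw) != header_len:
        raise ValueError("file ends before the header does")
    try:
        header = json.loads(raw)
    except (UnicodeDecodeError, json.JSONDecodeError) as exc:
        raise ValueError("header is not valid JSON") from exc
    if not isinstance(header, dict):
        raise ValueError("header is not a JSON object")
    return header
```

Calling read_safetensors_header("model.safetensors") in the build directory returns a dictionary keyed by tensor name for a well-formed file and raises ValueError otherwise. It only inspects the header, so it stays fast even for multi-gigabyte models.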

If you want to download it during the build process from a public link:

Alternatively, if you would rather have docker download the model from the internet, you can use a RUN wget command instead of an ADD command, as follows:

FROM alpine/git:2.36.2 as download

COPY builder/clone.sh /clone.sh

# Clone the repos and clean unnecessary files

RUN . /clone.sh taming-transformers https://github.com/CompVis/taming-transformers.git 24268930bf1dce879235a7fddd0b2355b84d7ea6 && \
    rm -rf data assets **/*.ipynb

RUN . /clone.sh stable-diffusion-stability-ai https://github.com/Stability-AI/stablediffusion.git 47b6b607fdd31875c9279cd2f4f16b92e4ea958e && \
    rm -rf assets data/**/*.png data/**/*.jpg data/**/*.gif

RUN . /clone.sh CodeFormer https://github.com/sczhou/CodeFormer.git c5b4593074ba6214284d6acd5f1719b6c5d739af && \
    rm -rf assets inputs

RUN . /clone.sh BLIP https://github.com/salesforce/BLIP.git 48211a1594f1321b00f14c9f7a5b4813144b2fb9 && \
    . /clone.sh k-diffusion https://github.com/crowsonkb/k-diffusion.git 5b3af030dd83e0297272d861c19477735d0317ec && \
    . /clone.sh clip-interrogator https://github.com/pharmapsychotic/clip-interrogator 2486589f24165c8e3b303f84e9dbbea318df83e8

# --> HERE UPDATE THE MODEL ID TO MATCH <--

RUN wget -O /model.safetensors https://civitai.com/api/download/models/4823

FROM python:3.10.9-slim

ENV DEBIAN_FRONTEND=noninteractive \
    PIP_PREFER_BINARY=1 \
    LD_PRELOAD=libtcmalloc.so \
    ROOT=/stable-diffusion-webui \
    PYTHONUNBUFFERED=1

SHELL ["/bin/bash", "-o", "pipefail", "-c"]

RUN apt-get update && \
    apt install -y \
    fonts-dejavu-core rsync git jq moreutils aria2 wget libgoogle-perftools-dev procps && \
    apt-get autoremove -y && rm -rf /var/lib/apt/lists/* && apt-get clean -y

RUN --mount=type=cache,target=/cache --mount=type=cache,target=/root/.cache/pip \
    pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

RUN --mount=type=cache,target=/root/.cache/pip \
    git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git && \
    cd stable-diffusion-webui && \
    git reset --hard 89f9faa63388756314e8a1d96cf86bf5e0663045 && \
    pip install -r requirements_versions.txt

COPY --from=download /repositories/ ${ROOT}/repositories/

COPY --from=download /model.safetensors /model.safetensors

RUN mkdir ${ROOT}/interrogate && cp ${ROOT}/repositories/clip-interrogator/data/* ${ROOT}/interrogate

RUN --mount=type=cache,target=/root/.cache/pip \
    pip install -r ${ROOT}/repositories/CodeFormer/requirements.txt

# Install Python dependencies (Worker Template)

COPY builder/requirements.txt /requirements.txt

RUN --mount=type=cache,target=/root/.cache/pip \
    pip install --upgrade pip && \
    pip install --upgrade -r /requirements.txt --no-cache-dir && \
    rm /requirements.txt

ARG SHA=89f9faa63388756314e8a1d96cf86bf5e0663045

RUN --mount=type=cache,target=/root/.cache/pip \
    cd stable-diffusion-webui && \
    git fetch && \
    git reset --hard ${SHA} && \
    pip install -r requirements_versions.txt

ADD src .

COPY builder/cache.py /stable-diffusion-webui/cache.py

RUN cd /stable-diffusion-webui && python cache.py --use-cpu=all --ckpt /model.safetensors

# Cleanup section (Worker Template)

RUN apt-get autoremove -y && \
    apt-get clean -y && \
    rm -rf /var/lib/apt/lists/*

RUN chmod +x /start.sh

CMD /start.sh

Now set the model using the start flags in automatic:

The only other thing to do is to edit the start script to point to the correct model file. In this case, we have stored our model as /model.safetensors, so no change is required. If your model has a different name or lives in a different folder, update /model.safetensors to match:

#!/bin/bash

echo "Worker Initiated"

echo "Starting WebUI API"

python /stable-diffusion-webui/webui.py --skip-python-version-check --skip-torch-cuda-test --no-tests --skip-install --ckpt /model.safetensors --lowram --opt-sdp-attention --disable-safe-unpickle --port 3000 --api --nowebui --skip-version-check --no-hashing --no-download-sd-model &

echo "Starting RunPod Handler"

python -u /rp_handler.py

At this point, you are ready to build your docker image. You can do so by running a command like this:

sudo DOCKER_BUILDKIT=1 docker build .

After you have built your image, you can push it to your favorite container registry. For Docker Hub, you can do the following:

Getting an image to Docker Hub

Suppose you have made your own Docker image and would like to share it with the world. You can sign up for an account on https://hub.docker.com/. After verifying your email, you are ready to upload your first Docker image.

- Log in on https://hub.docker.com/

- Click on Create Repository.

- Choose a name (e.g. automatic-custom) and a description for your repository and click Create.

- Log into the Docker Hub from the command line

docker login --username=yourhubusername

Replace yourhubusername with the user name you used for the account, and enter your password when prompted. If everything worked, you will get a message similar to

WARNING: login credentials saved in /home/username/.docker/config.json

Login Succeeded

- Check the image ID using

docker images

and what you will see will be similar to

REPOSITORY         TAG     IMAGE ID       CREATED         SIZE

automatic-custom   1.0.0   023ab91c6291   3 minutes ago   9.975 GB

and tag your image

docker tag 023ab91c6291 yourhubusername/automatic-custom:1.0.0

The number must match the image ID shown by docker images, and :1.0.0 is the tag. In general, a good tag choice will help you understand what this container should be used in conjunction with or what it represents. In this case, it's the first version, so we tag it 1.0.0.

- Push your image to the repository you created

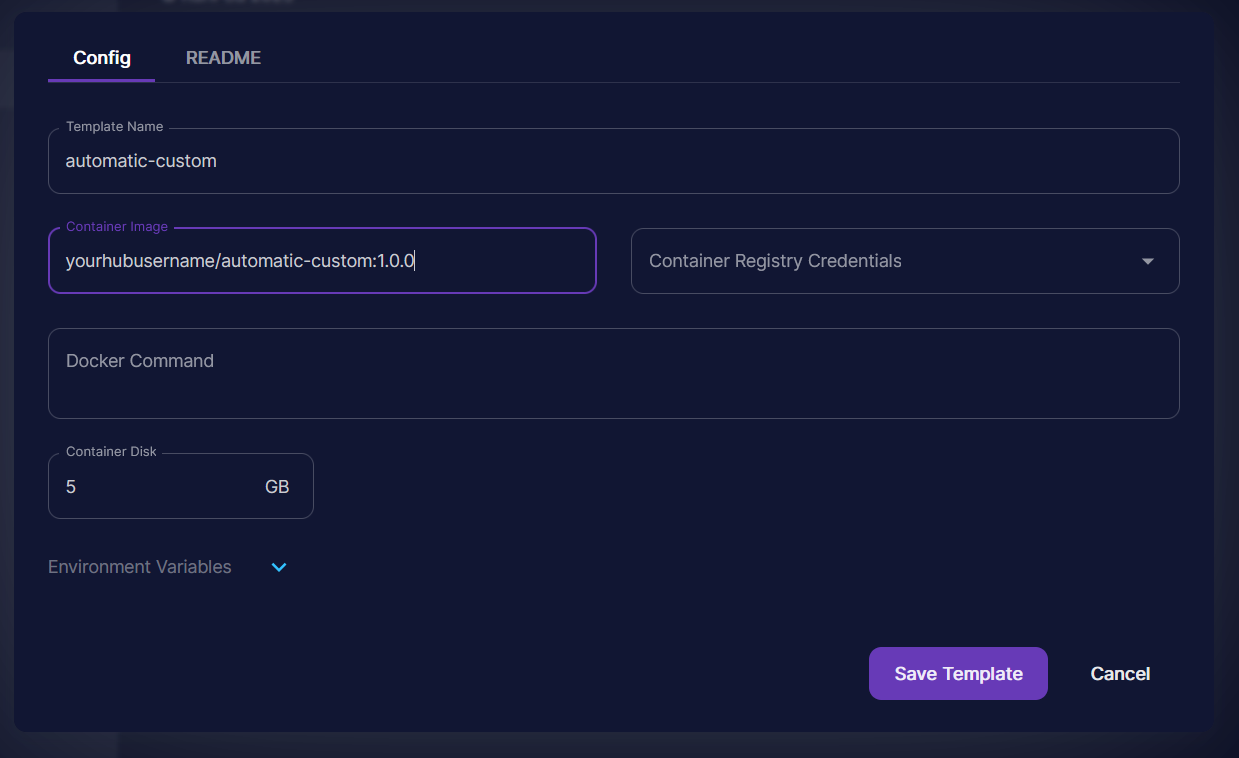

docker push yourhubusername/automatic-custom:1.0.0

Your image is now available for everyone to use. To add it to your template, create a new template and fill it out with the container image name you just pushed.

You can then use your template in your API by selecting it from the dropdown menu.

At this point, you can follow the documentation for how to deploy your API and use it:

https://docs.runpod.io/serverless-ai/custom-apis/autoscaling

https://docs.runpod.io/serverless-ai/custom-apis/using-your-api
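As a rough illustration of what calling the deployed endpoint might look like, here is a minimal sketch. The URL shape (https://api.runpod.ai/v2/<endpoint id>/runsync), the Bearer authorization header, and the {"input": ...} envelope are assumptions based on RunPod's serverless API, so treat the documentation linked above as authoritative. The function names are hypothetical, and the keys inside the input depend on what your handler forwards to the web UI.

```python
import json
import urllib.request


def build_runsync_request(endpoint_id, api_key, job_input):
    """Build (but do not send) a synchronous run request for an endpoint."""
    return urllib.request.Request(
        f"https://api.runpod.ai/v2/{endpoint_id}/runsync",  # assumed URL shape
        data=json.dumps({"input": job_input}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def run_sync(endpoint_id, api_key, job_input, timeout_s=300):
    """Send the request and return the decoded JSON response."""
    request = build_runsync_request(endpoint_id, api_key, job_input)
    with urllib.request.urlopen(request, timeout=timeout_s) as resp:
        return json.loads(resp.read())
```

A call such as run_sync("your-endpoint-id", "your-api-key", {"prompt": "a lighthouse at dusk", "steps": 20}) would then block until the worker responds. Building the request separately from sending it makes the payload easy to inspect without spending any GPU time.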

Final Thoughts

While using the AUTOMATIC1111 API is convenient because it has a lot of built-in functionality, there are a few things to consider.

Firstly, the cold start time for this API is about 15 seconds, vs 10 seconds for a raw diffusers-based worker. AUTOMATIC1111 takes a bit of time to go through its internal checks and to start the uvicorn API that it uses internally.

Secondly, because the API has so much functionality, it's challenging to make sure that every piece of it works, so be aware!

Other than that, it's a really great way to get started without having to define a bunch of your own standards or roll your own code base. Happy hunting!