The Complete Guide to Training Video LoRAs: From Concept to Creation

Learn how to train custom video LoRAs for models like Wan, Hunyuan Video, and LTX Video. This guide covers hyperparameters, dataset prep, and best practices to help you fine-tune high-quality, motion-aware video outputs.

Video generation models like Wan, Hunyuan Video, and LTX Video have revolutionized how we create dynamic visual content. While these base models are impressive on their own, their full potential is unlocked through Low-Rank Adaptation (LoRA) — a fine-tuning technique that allows you to customize outputs for specific subjects, styles, or movements without the computational burden of full model training.

This guide demystifies the process of training video LoRAs, breaking down the essential hyperparameters, technical workflows, and practical considerations that determine success. Whether you're looking to create character-focused LoRAs, style adaptations, or specialized motion patterns, understanding these fundamental concepts will help you navigate the complexities of video LoRA training and avoid common pitfalls that lead to wasted computation time and suboptimal results.

From dataset preparation to final inference, we'll explore the critical decisions that influence training outcomes and provide practical recommendations based on real-world experience with these cutting-edge models. Let's dive into the technical details that make the difference between a mediocre LoRA and one that consistently produces high-quality, targeted video outputs.

Training Procedure

Currently, the best package to begin your training is diffusion-pipe by tdrussell. We have a more in depth guide on this here - but here is a quick tl;dr.

This guide documents the technical implementation of tdrussell's diffusion-pipe for training custom LoRAs compatible with Wan, Hunyuan Video, and LTX Video models. The process requires a machine with at least 48GB VRAM (80GB for larger datasets) and follows these core steps:

The implementation begins with cloning the diffusion-pipe repository and installing dependencies including git-lfs. Required model files (transformer, VAE, LLM, and CLIP) must be downloaded to a designated models directory. The training configuration is managed through .toml files, with key paths pointing to model weights and dataset locations. Finally, you provide a dataset of images and videos, with captions in .txt files for each corresponding file.

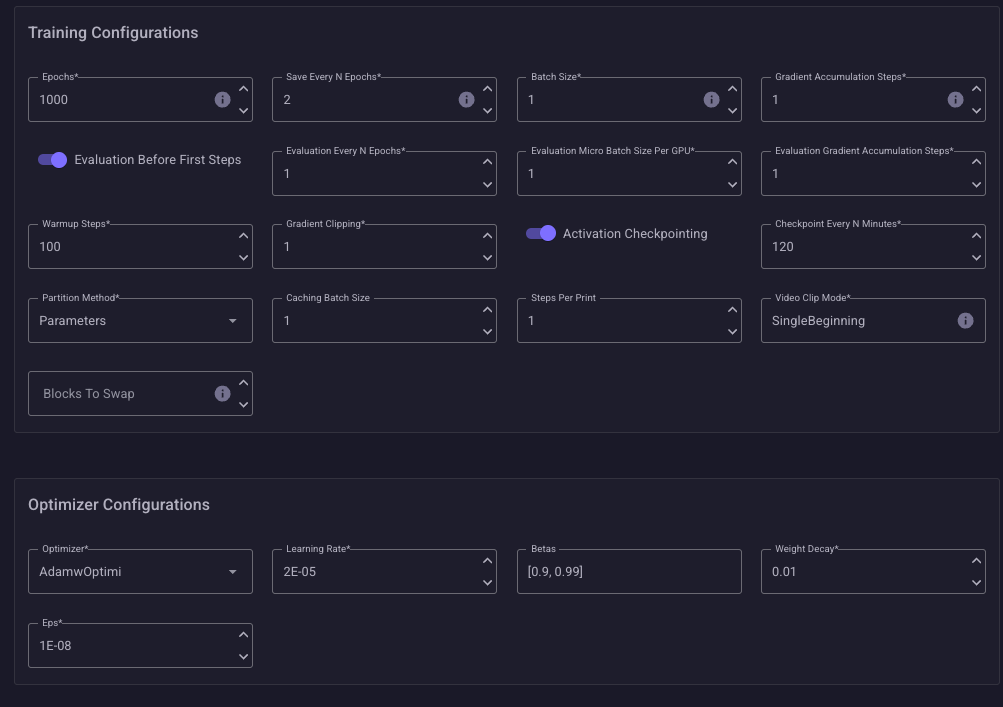

If you'd prefer a UI instead of editing .toml files, I'd recommend the template alissonpereiraanjos/diffusion-pipe-new-ui:latest if you just want to dive straight in.

It's possible to train on images, videos, or a mix of both. Training exclusively on images provides better computational efficiency, allowing for higher batch sizes and faster iterations, while enabling access to vast libraries of high-quality, diverse reference material; however, this approach struggles to capture temporal relationships, motion coherence, and dynamic lighting changes crucial for realistic video generation. Conversely, training solely on videos excels at learning natural motion patterns, temporal consistency, and dynamic scene evolution, but demands significantly more computational resources, often yields smaller effective datasets due to hardware constraints, and may require extensive preprocessing to handle variable framerates and clip lengths.

It's also extremely important to be sure you generate captions that are accurate—generating captions for images is trivial and has been around forever with CLIP. Your videos, however, will be automatically truncated to ~4-5 second clips (depending on the model) so this may require significant editing and manually examining files to be sure that what you believe you are providing during training and what the training process actually sees are in sync. For example, if you provide a caption of a specific arm motion, and the video is 6 seconds, what the model may see in the second clip is only the tail end of it, despite being provided the same caption – which will affect the model's understanding of the motion in a way you might not have intended.

A hybrid approach combining both modalities potentially offers the best compromise: leveraging images for concept variety and visual fidelity while using videos to establish temporal dynamics, though this introduces additional complexity in balancing dataset weights to prevent favoring either static or dynamic features. The optimal choice ultimately depends on your specific objectives—image-only training works well for style transfer and character appearance, video-only suits motion styles and dynamic effects, while mixed training serves well-rounded applications requiring both visual quality and temporal coherence. Practically, what is common is offering multiple versions of the same LoRA

Steps/Epochs

Steps are simply reflective of the amount of time (and by extension, GPU cost) spent training your LoRA, and an epoch is how long it takes for the training process to iterate completely over your dataset. This is impacted by rank, dataset size, and learning rate to varying degrees.

Ideally, you'll want to simply checkpoint every so many epochs and try to generate your desired subject as you go. You can save your checkpoints as they are created, so you can always return back to a previous point.

When testing out your LoRA, it's recommended to use the same seed and continue to generate with the same prompt so you can see how close you are getting to your desired result. Be aware that progress is not likely to be linear, and in fact you may even see it regress between checkpoints. An easy way to see if you are on the right track is to increase the strength of the LoRA during a trial run, though. Although you're likely to see increasing artifacts once the strength goes beyond 2, you can still look for your desired subject in the output, and if increasing the strength shows a closer match despite the artifacting, it's likely you're on the right track and the LoRA just needs some more time in the oven. There's not a hard limit on how far you can continue to train, but just some things to keep in mind:

- Overtraining will not compensate for poorly set hyperparameters elsewhere – throwing money at the problem isn't a solution

- You will generally get diminishing returns past a certain point

- It's possible to get stuck in local minima through extensive training where further training is not likely to help

Learning Rate

Learning rate is one of the most critical hyperparameters when training a LoRA. It determines how quickly your model adapts to the training data and ultimately affects both the training speed and the quality of your final results.

A learning rate that's too high will cause your model to take large, erratic steps during training, potentially "jumping over" the optimal solution and never converging. You'll see this in practice when your training loss fluctuates wildly or even increases over time. Too low a learning rate, and your model will take tiny, overly cautious steps, requiring an excessive number of training steps to reach a good solution - or worse, getting stuck in suboptimal local minima early in training.

For LoRA training specifically, learning rate typically falls between 1e-4 (0.0001) and 1e-6 (0.000001), with most successful public LoRAs using values around 1e-5 (0.00001) as a reasonable starting point. But this can vary dramatically depending on several factors:

- Dataset size: Larger datasets generally work better with lower learning rates, as each update is more statistically reliable

- Rank: Higher rank LoRAs often benefit from slightly lower learning rates to prevent instability

- Base model: Different base models may require different learning rate ranges based on their architecture and pretrained weights sensitivity

- Training objective: More complex transformations might require more conservative learning rates

Many experienced LoRA trainers have found that combining a lower learning rate with a longer training schedule produces superior results compared to higher learning rates with shorter schedules. The model adapts more slowly but more thoroughly to the nuances in your dataset.

Learning rate schedulers can also play an important role. Techniques like cosine decay (where the learning rate gradually decreases over training) or warmup periods (where the learning rate starts very small and increases to the target rate) have proven effective for LoRA training. A common approach is to start with a small warmup period (around 5-10% of total steps) followed by a gradual decay for the remainder of training.

When troubleshooting poor LoRA performance, learning rate is often the first hyperparameter you should adjust. If your LoRA seems to be ignoring aspects of your training data or producing generic results, try reducing the learning rate and training for more steps. If training progress seems too slow or stalls early, a modest increase might help.

Rank

Rank can be described as a general 'capacity' for how much a LoRA can affect the outputs of a base model. You'll notice that very low rank LoRAs (4-8) will be only a few megabytes in size, while high rank LoRAs (128+) will be over a gigabyte, easily. Lower rank LoRAs will train more quickly (as in, orders of magnitude more quickly), but ultimately be far more limited in how they can affect the final video output.

With a lower-rank LoRA (like rank 4 or 8) you could make subtle adjustments to a person's appearance, like changing clothing color, slight facial expression changes, minor lighting adjustments. These are essentially "fine-tuning" the base model's existing capabilities in a specific direction. With a higher-rank LoRA (like rank 64 or 128) you could make more dramatic transformations, such as changing age significantly, transforming someone into a fantasy creature, or inducing major style shifts.

The other disadvantage of high-rank LoRAs is that they require much larger datasets to take advantage of. Higher rank LoRAs have much larger parameter counts than lower rank LoRAs, and an insufficient dataset size could result in overparameterization which means that the model will have more parameters than there is data available to fill it. This can result in situations like extra limbs, grotesque, unintended features, and so on.

And while there's nothing to stop you from simply duplicating your dataset to fill the requirements to properly fill those parameters, that is also a recipe for overfitting where your LoRA will struggle to generalize to subjects and will only be able to produce satisfactory results when your prompt is similar to what exists in your datasets.

So, if you have a very small dataset (like only a couple of videos) you will really have three realistic options, each with their pros and cons:

- Train a low-rank LoRA on your desired subject and use it to generate videos to augment your dataset. In some situations, this will be the only way to get more data for the dataset. The downside is that generated synthetic data, especially in video domains, may fail to include intricacies natural to motion, or may induce the model to focus and exaggerate on artifacts that you would prefer it not. This can be mitigated by extensive generation and curation of your synthetic data, with increasing time costs as your criteria grows more stringent.

- Expand the scope and theme of your LoRA where additional data may become available, which may result in compromises in what you want the LoRA to be able to generate while resulting in greater expressivity.

- Duplicate your data to a small degree. Overfitting is not a guarantee merely because you have duplicated data, it simply becomes more likely the more you have.

Batch Size

Batch size determines how many training samples are processed before the model weights are updated. This hyperparameter directly impacts both training speed and stability.

For video LoRAs, batch size takes on special significance due to the memory-intensive nature of video data. While larger batch sizes (16-64) are common in image-based LoRA training, video LoRA training often requires smaller batches (4-8) due to GPU memory constraints, especially when training with higher resolution or longer video clips.

Key considerations for batch size include:

- Memory constraints: Higher resolution videos, longer sequences, or training on consumer GPUs may necessitate smaller batch sizes

- Training stability: Larger batch sizes tend to produce more stable gradients, resulting in smoother training curves

- Generalization: Some research suggests that smaller batch sizes can lead to better generalization, though this must be balanced against training stability

- Effective batch size: When using gradient accumulation, your effective batch size is (batch size * accumulation steps)

A common strategy is to start with the largest batch size your hardware can accommodate and adjust based on training stability. If you notice highly fluctuating loss values, consider increasing the batch size (if possible) or implementing gradient accumulation to simulate a larger batch.

Dataset Balance and Augmentation

Remember that diffusion models work by understanding relationships. If you ask it to generate a video of a tiger on the moon, it's pretty unlikely that there is anything resembling this in the dataset. But it knows what a tiger is, and it knows what the moon looks like, so it's able to combine these two things in the process to create something that has never before been seen.

This will become more noticeable in low-rank LoRAs that may not be able to generalize as well; if you have a character LoRA that is, say, a woman wearing a certain kind of makeup, prompting and describing that makeup may help it to more consistently generate that character (of course, the flip side is that a LoRA that can't generalize well may not be able to generate the woman not wearing the makeup.)

The composition and preprocessing of your video dataset significantly impact LoRA quality. Consider these factors:

- Pose diversity: Include a wide range of poses, expressions, and viewing angles for subject LoRAs

- Temporal diversity: For motion-related LoRAs, include various movement speeds and patterns

- Background variety: Training with consistent backgrounds can cause the LoRA to "memorize" the environment; varying backgrounds improves generalization

- Resolution consistency: While some variation is acceptable, extreme resolution differences can bias training

Useful augmentation techniques for video LoRAs include:

- Temporal augmentations: Random frame reversal, speed changes

- Spatial augmentations: Horizontal flips, slight rotations, minimal cropping

- Color augmentations: Subtle brightness, contrast, and saturation adjustments

Be cautious with aggressive augmentations as they can introduce artifacts or diminish the very characteristics you're trying to capture. If you are looking to generate synthetic data on a person and are concerned about accuracy, you can generate a corpus of videos and then use something like Facenet to find the most accurately generated candidates; add them to your dataset, re-train the LoRA, rinse, and repeat.

Moving Forward with Your Video LoRA Training Journey

Training effective LoRAs for video models requires balancing numerous interrelated hyperparameters while maintaining a clear vision of your desired outcome. The process is inherently iterative—you'll likely need to experiment with different configurations, dataset compositions, and training durations before achieving optimal results.

Remember that different training objectives call for different approaches. A style LoRA benefits from diverse visual examples but may not require extensive motion data, whereas a character LoRA needs consistent subject representation across various poses and contexts. Motion-specific LoRAs demand careful attention to temporal diversity and frame sequencing.

As you progress in your LoRA training journey, develop a systematic approach to experimentation. Document your hyperparameter choices, training conditions, and results to build an understanding of what works for your specific goals. Take advantage of checkpoint saving to preserve promising intermediate models, and don't hesitate to restart training with adjusted parameters if you encounter persistent issues.