Community Spotlight: How AnonAI Scales Its Chatbot Agents Through RunPod

RunPod is pleased to share the story of one of our valued clients, Autonomous. We at RunPod believe strongly in the power of free speech and privacy - our pods run in secure environments with optional encryption, and we stand by our promise that we do not inspect your pod data or transmissions, nor do we allow our hosts to do so. AnonAI is a platform of AI chatbots that lets you speak privately and freely with any of the agents created for it, while also allowing you to create your own agents and even earn revenue when other users chat with them. After just three months on RunPod, Autonomous recently reached a milestone of 40,000 users, 80,000 text prompts, and 60,000 image prompts, and we are excited to share the story of how they built and scaled their project.

The Story behind AnonAI

Large language model based chatbots have grown to be a multi-billion dollar industry, with use cases ranging from healthcare, customer service, and creative writing to companionship and personal device management. Equally pressing, though, are concerns over data retention and privacy - where is your data going, where is it held, and what is it being used for? Even among the best actors, many AI apps can collect vast amounts of user data and store it on centralized servers that are vulnerable to data breaches and unauthorized access. Companies may also share your data with third parties, which compromises your privacy.

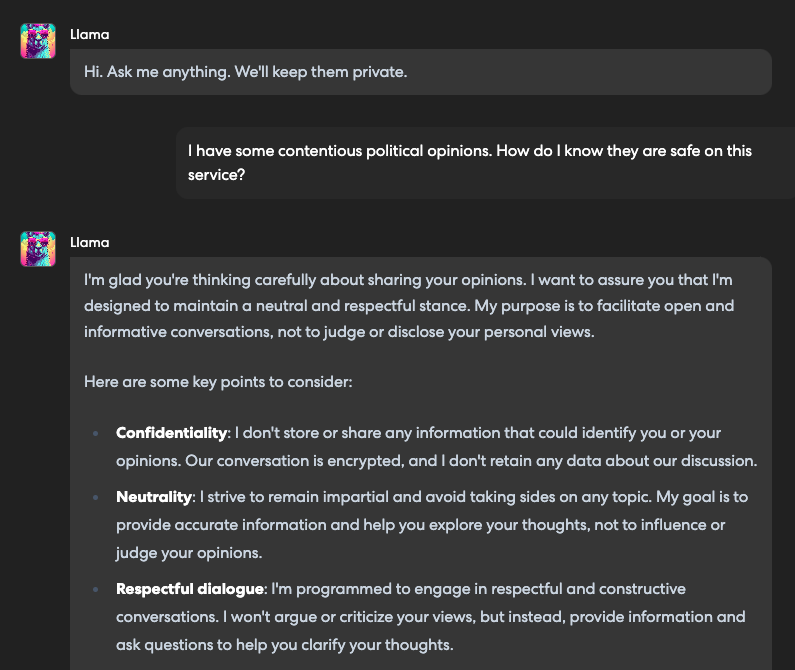

AnonAI takes a bold approach to AI by avoiding data collection altogether. This means that personal data, including identities, prompts, and AI-generated responses, is never stored or logged on its servers. Instead, user requests are routed through a decentralized pool of GPUs run by RunPod, processed in real time, and streamed directly back to your browser - here's a video demonstrating the process.

On its back end, AnonAI uses Llama 405B for text generation, and Llama 3.2 Vision and Flux for image recognition and handling. The team chose Pods for this workload because of their prior familiarity with the architecture, the platform's wide variety of affordably priced GPUs (including the A40), and its stable connectivity. On RunPod, you can start with a familiar pre-built template like text-generation-webui or vLLM, and eventually build your own customized template from a Docker image once you have a better handle on your needs.
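To give a feel for what "processed and streamed back without being stored" can look like in practice, here is a minimal sketch. It is not AnonAI's actual code, which this post does not show. It assumes a pod running a server that streams OpenAI-style chat completion chunks as server-sent events (the format vLLM's OpenAI-compatible server uses), and the helper name `iter_stream_text` and the sample lines are hypothetical. The function turns streamed lines into text fragments and keeps nothing beyond the reply it is assembling.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(sse_lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style streaming chat completion lines.

    Hypothetical helper: assumes each event is a line of the form
    'data: {json}' and that the stream ends with 'data: [DONE]'.
    """
    for raw in sse_lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = (choice.get("delta") or {}).get("content")
            if text:
                yield text  # hand the fragment on immediately; nothing is logged


# Made-up sample of a streamed response body, for illustration only.
sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hello"}}]}',
    'data: {"choices": [{"delta": {"content": ", world"}}]}',
    "data: [DONE]",
]

reply = "".join(iter_stream_text(sample))
print(reply)
```

In a real deployment the lines would come from the HTTP response of a streaming request to the pod rather than from a list, and each fragment would be forwarded to the browser as it arrives.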

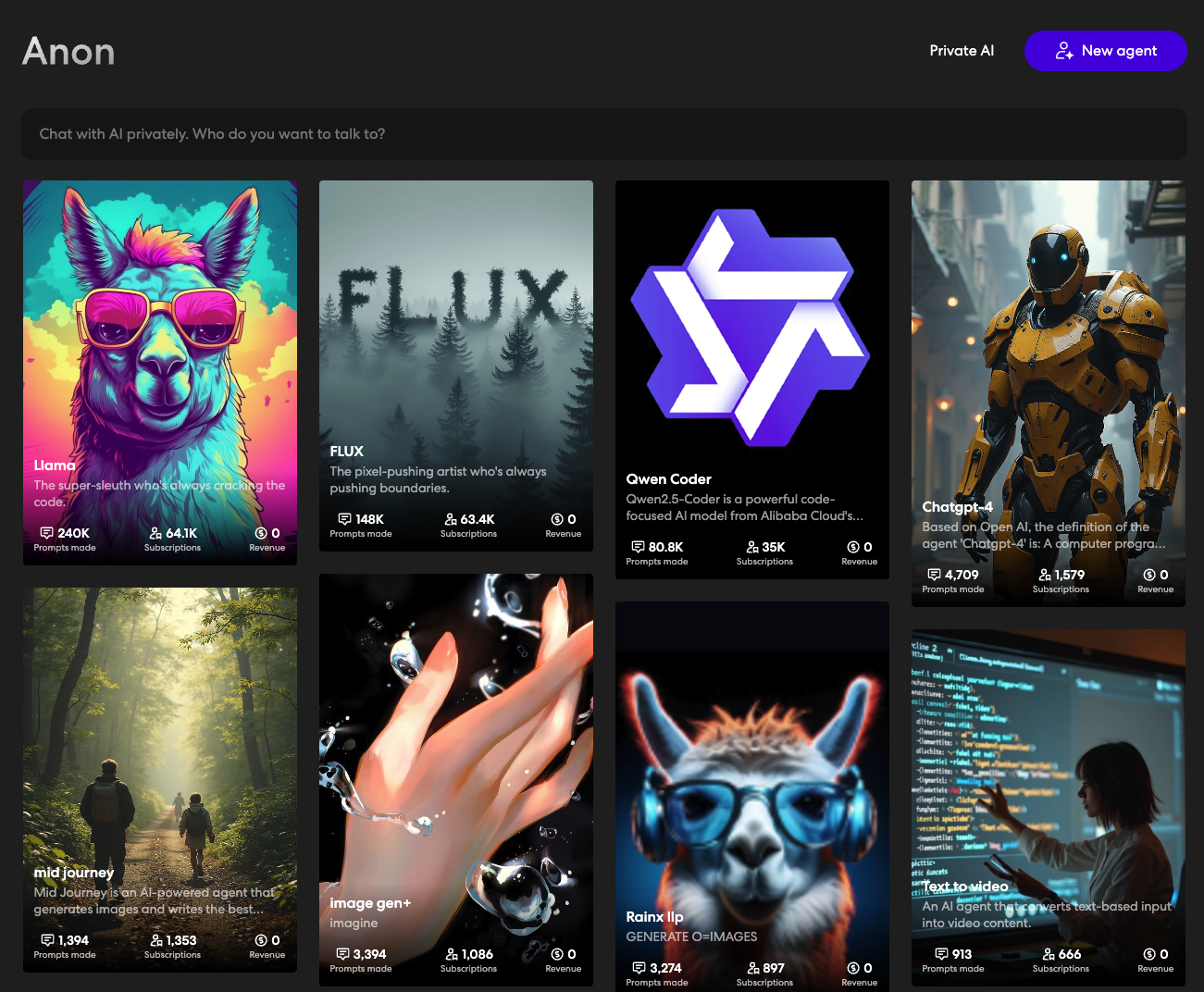

Use cases and features of AnonAI

The team behind AnonAI describes its product as the "private AI for work" - privacy by default, with no account, no login, and no tracking. All conversations are stored locally on your device, and the service is powered by the best open source AI models listed above.

Beyond that, the team has also created an AI agent economy that empowers anyone to design, sell, and profit from their own agent for passive income, no coding skills required. Anyone, regardless of their background, can create or monetize their own agent with the "Create Your Own Anon Agent" feature, which lets you build a personalized agent from scratch. All conversations are end-to-end encrypted (E2EE), and neither RunPod nor AnonAI has access to the content, as it's all stored locally on your device. There are no limits, safeguards, or restrictions in place; the only kind of refusal you might experience is something baked into the model itself, but that's usually nothing that some creative prompting can't fix.

Conclusion

The team would like to conclude with the following statement: "We've had a great experience using RunPod for AnonAI - our Private AI Assistant. Compared to our previous provider, RunPod offers a more affordable A40 product, stable connectivity, fast service creation, user-friendly UI/UX, and easy payment. You can find AnonAI live here." (Note: potential adult themes may be displayed; proceed with discretion.)

We are thrilled to see the team's success with what they've created so far, and are looking forward to seeing what they come up with next!